Tutorial: Upload, access and explore your data in Azure Machine Learning

- 07/05/2023

APPLIES TO: Python SDK azure-ai-ml v2 (current)

In this tutorial, you learn how to:

- Upload your data to cloud storage

- Create an Azure Machine Learning data asset

- Access your data in a notebook for interactive development

- Create new versions of data assets

The start of a machine learning project typically involves exploratory data analysis (EDA), data preprocessing (cleaning, feature engineering), and building machine learning model prototypes to validate hypotheses. This prototyping phase is highly interactive, and it lends itself to development in an IDE or a Jupyter notebook with a Python interactive console. This tutorial describes these ideas.

Prerequisites

- To use Azure Machine Learning, you first need a workspace. If you don't have one, complete "Create resources you need to get started" to create a workspace and learn more about using it.

- Sign in to studio and select your workspace if it's not already open.

- Open or create a notebook in your workspace:

  - Create a new notebook if you want to copy and paste code into cells.

  - Or, open tutorials/get-started-notebooks/explore-data.ipynb from the Samples section of studio. Then select Clone to add the notebook to your Files. (See where to find Samples.)

Set your kernel

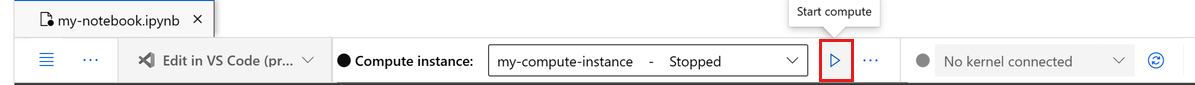

- On the top bar above your opened notebook, create a compute instance if you don't already have one.

- If the compute instance is stopped, select Start compute and wait until it is running.

- Make sure that the kernel, found on the top right, is Python 3.10 - SDK v2. If not, use the dropdown to select this kernel.

- If you see a banner that says you need to be authenticated, select Authenticate.

Important

The rest of this tutorial contains cells of the tutorial notebook. Copy/paste them into your new notebook, or switch to the notebook now if you cloned it.

Download the data used in this tutorial

For data ingestion, Azure Data Explorer handles raw data in these formats. This tutorial uses this CSV-format credit card client data sample. The steps proceed in an Azure Machine Learning resource. In that resource, we'll create a local folder, with the suggested name of data, directly under the folder where this notebook is located.

Note

This tutorial depends on data placed in an Azure Machine Learning resource folder location. For this tutorial, 'local' means a folder location in that Azure Machine Learning resource.

- Select Open terminal below the three dots.

- The terminal window opens in a new tab.

- Make sure you cd to the same folder where this notebook is located. For example, if the notebook is in a folder named get-started-notebooks:

      cd get-started-notebooks    # modify this to the path where your notebook is located

- Enter these commands in the terminal window to copy the data to your compute instance:

      mkdir data
      cd data    # the sub-folder where you'll store the data
      wget https://azuremlexamples.blob.core.windows.net/datasets/credit_card/default_of_credit_card_clients.csv

- You can now close the terminal window.

Learn more about this data on the UCI Machine Learning Repository.
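If you prefer to stay in the notebook rather than the terminal, the same download can be sketched in Python with the standard library. The helper name `download_if_missing` is hypothetical and not part of the tutorial notebook, and the sketch assumes the compute instance can reach the URL.

```python
from pathlib import Path
from urllib.request import urlretrieve


def download_if_missing(url: str, folder: str = "data") -> Path:
    """Download url into folder (created if needed) unless the file is already there."""
    target_dir = Path(folder)
    target_dir.mkdir(parents=True, exist_ok=True)  # same effect as `mkdir data`
    target = target_dir / url.rsplit("/", 1)[-1]   # keep the remote file name
    if not target.exists():
        urlretrieve(url, str(target))              # same role as `wget <url>`
    return target
```

Calling `download_if_missing("https://azuremlexamples.blob.core.windows.net/datasets/credit_card/default_of_credit_card_clients.csv")` from the notebook's folder would place the file at ./data/default_of_credit_card_clients.csv, which is the path the later cells expect.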

Create handle to workspace

Before we dive into the code, you need a way to reference your workspace. You'll create ml_client as a handle to the workspace. You'll then use ml_client to manage resources and jobs.

In the next cell, enter your Subscription ID, Resource Group name, and Workspace name. To find these values:

- In the upper right Azure Machine Learning studio toolbar, select your workspace name.

- Copy the values for workspace, resource group, and subscription ID into the code.

- You'll need to copy one value, close the area and paste, then come back for the next one.

Python

    from azure.ai.ml import MLClient
    from azure.identity import DefaultAzureCredential
    from azure.ai.ml.entities import Data
    from azure.ai.ml.constants import AssetTypes

    # authenticate
    credential = DefaultAzureCredential()

    # Get a handle to the workspace
    ml_client = MLClient(
        credential=credential,
        subscription_id="<SUBSCRIPTION_ID>",
        resource_group_name="<RESOURCE_GROUP>",
        workspace_name="<AML_WORKSPACE_NAME>",
    )

Note

Creating MLClient will not connect to the workspace. The client initialization is lazy: it waits until the first time it needs to make a call, which happens in the next code cell.
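Hard-coding the three identifiers works for a quick start, but it is easy to leave a placeholder in place by mistake. One possible variation, sketched here, reads them from environment variables and fails early if any is missing. The variable names `AZURE_SUBSCRIPTION_ID`, `AZURE_RESOURCE_GROUP`, and `AZUREML_WORKSPACE_NAME`, and both helper functions, are assumptions chosen for illustration, not names the SDK requires.

```python
import os


def workspace_settings_from_env(env=None) -> dict:
    """Collect MLClient keyword arguments from environment variables (hypothetical names)."""
    env = os.environ if env is None else env
    mapping = {
        "subscription_id": "AZURE_SUBSCRIPTION_ID",
        "resource_group_name": "AZURE_RESOURCE_GROUP",
        "workspace_name": "AZUREML_WORKSPACE_NAME",
    }
    missing = [var for var in mapping.values() if not env.get(var)]
    if missing:
        raise KeyError(f"Missing environment variables: {', '.join(missing)}")
    return {arg: env[var] for arg, var in mapping.items()}


def make_ml_client():
    """Build the workspace handle; the Azure imports run only when this is called."""
    from azure.ai.ml import MLClient
    from azure.identity import DefaultAzureCredential

    return MLClient(credential=DefaultAzureCredential(), **workspace_settings_from_env())
```

With the three variables set on the compute instance, `ml_client = make_ml_client()` would take the place of the cell above.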

Upload data to cloud storage

Azure Machine Learning uses Uniform Resource Identifiers (URIs), which point to storage locations in the cloud. A URI makes it easy to access data in notebooks and jobs. Data URI formats look similar to the web URLs that you use in your web browser to access web pages. For example:

- Access data from public https server: https://<account_name>.blob.core.windows.net/<container_name>/<folder>/<file>

- Access data from Azure Data Lake Gen 2: abfss://<file_system>@<account_name>.dfs.core.windows.net/<folder>/<file>
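Because these URIs follow the usual scheme://host/path layout, Python's standard `urllib.parse` module can split them into their parts. The sketch below does that for the two formats listed above. The account, container, and file names are made-up placeholders, and `describe_storage_uri` is a hypothetical helper.

```python
from urllib.parse import urlparse


def describe_storage_uri(uri: str) -> dict:
    """Split a blob (https) or Data Lake Gen 2 (abfss) URI into named parts."""
    parts = urlparse(uri)
    if parts.scheme not in ("https", "abfss"):
        raise ValueError(f"Unsupported scheme: {parts.scheme}")
    account = parts.hostname.split(".")[0]       # <account_name>
    if parts.scheme == "abfss":
        container = parts.username               # <file_system> sits before the '@'
        path = parts.path.lstrip("/")
    else:
        # For https, the first path segment is <container_name>
        container, _, path = parts.path.lstrip("/").partition("/")
    return {"scheme": parts.scheme, "account": account, "container": container, "path": path}


# Made-up example values, for illustration only
blob_uri = "https://myaccount.blob.core.windows.net/mycontainer/folder/file.csv"
lake_uri = "abfss://myfilesystem@myaccount.dfs.core.windows.net/folder/file.csv"
print(describe_storage_uri(blob_uri))
print(describe_storage_uri(lake_uri))
```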

An Azure Machine Learning data asset is similar to web browser bookmarks (favorites). Instead of remembering long storage paths (URIs) that point to your most frequently used data, you can create a data asset, and then access that asset with a friendly name.

Data asset creation also creates a reference to the data source location, along with a copy of its metadata. Because the data remains in its existing location, you incur no extra storage cost and don't risk data source integrity. You can create data assets from Azure Machine Learning datastores, Azure Storage, public URLs, and local files.

Tip

For smaller-size data uploads, Azure Machine Learning data asset creation works well for data uploads from local machine resources to cloud storage. This approach avoids the need for extra tools or utilities. However, a larger-size data upload might require a dedicated tool or utility - for example, azcopy. The azcopy command-line tool moves data to and from Azure Storage.

Learn more about azcopy here.

The next notebook cell creates the data asset. The code sample uploads the raw data file to the designated cloud storage resource.

Each time you create a data asset, you need a unique version for it. If the version already exists, you'll get an error. In this code, we use "initial" as the version for the first read of the data. If that version already exists, we skip creating it again.

You can also omit the version parameter, and a version number is generated for you, starting with 1 and then incrementing from there.

In this tutorial, we use the name "initial" as the first version. The Create production machine learning pipelines tutorial will also use this version of the data, so here we are using a value that you'll see again in that tutorial.
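The versioning rules described above can be pictured with a small in-memory model. This is only a toy sketch of the behavior as this tutorial describes it (explicit versions must be unique, and omitted versions count up from 1), not how Azure Machine Learning is implemented. The class `ToyAssetRegistry` and the example URLs are hypothetical.

```python
class ToyAssetRegistry:
    """Toy model: maps (name, version) to a storage URI, like a set of bookmarks."""

    def __init__(self):
        self._assets = {}

    def create(self, name, uri, version=None):
        if version is None:
            # No version given: use the next integer, starting at 1
            numeric = [int(v) for (n, v) in self._assets if n == name and v.isdigit()]
            version = str(max(numeric, default=0) + 1)
        if (name, version) in self._assets:
            raise ValueError(f"{name}:{version} already exists")
        self._assets[(name, version)] = uri
        return version

    def get(self, name, version):
        return self._assets[(name, version)]


registry = ToyAssetRegistry()
registry.create("credit-card", "https://example.com/raw.csv", version="initial")
auto_1 = registry.create("other-data", "https://example.com/a.csv")  # version omitted
auto_2 = registry.create("other-data", "https://example.com/b.csv")  # version omitted
print(auto_1, auto_2)
```

In this toy model, a second `create` call with `version="initial"` for `credit-card` raises an error, which mirrors why the tutorial cell checks for an existing version before creating one.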

Python

    from azure.ai.ml.entities import Data
    from azure.ai.ml.constants import AssetTypes

    # update the 'my_path' variable to match the location of where you downloaded the data on your
    # local filesystem
    my_path = "./data/default_of_credit_card_clients.csv"

    # set the version number of the data asset
    v1 = "initial"

    my_data = Data(
        name="credit-card",
        version=v1,
        description="Credit card data",
        path=my_path,
        type=AssetTypes.URI_FILE,
    )

    ## create data asset if it doesn't already exist:
    try:
        data_asset = ml_client.data.get(name="credit-card", version=v1)
        print(
            f"Data asset already exists. Name: {my_data.name}, version: {my_data.version}"
        )
    except Exception:
        # the lookup failed, so treat this version as not yet created
        ml_client.data.create_or_update(my_data)
        print(f"Data asset created. Name: {my_data.name}, version: {my_data.version}")

You can see the uploaded data by selecting Data on the left. You'll see the data is uploaded and a data asset is created:

This data is named credit-card, and in the Data assets tab, we can see it in the Name column. This data was uploaded to your workspace's default datastore, named workspaceblobstore, which appears in the Data source column.

An Azure Machine Learning datastore is a reference to an existing storage account on Azure. A datastore offers these benefits:

- A common and easy-to-use API, to interact with different storage types (Blob/Files/Azure Data Lake Storage) and authentication methods.

- An easier way to discover useful datastores, when working as a team.

- In your scripts, a way to hide connection information for credential-based data access (service principal/SAS/key).

Access your data in a notebook

Pandas directly supports URIs. This example shows how to read a CSV file from an Azure Machine Learning datastore:

    import pandas as pd

    df = pd.read_csv("azureml://subscriptions/<subid>/resourcegroups/<rgname>/workspaces/<workspace_name>/datastores/<datastore_name>/paths/<folder>/<filename>.csv")

However, as mentioned previously, it can become hard to remember these URIs. Additionally, you must manually substitute all <substring> values in the pd.read_csv command with the real values for your resources.
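One way to avoid hand-editing the placeholders is to assemble the URI from its parts. The sketch below follows the URI layout shown above. The function name `datastore_uri` and all example values are hypothetical.

```python
def datastore_uri(subscription_id, resource_group, workspace, datastore, path):
    """Assemble an azureml:// datastore URI in the layout shown in this tutorial."""
    return (
        f"azureml://subscriptions/{subscription_id}"
        f"/resourcegroups/{resource_group}"
        f"/workspaces/{workspace}"
        f"/datastores/{datastore}"
        f"/paths/{path.lstrip('/')}"
    )


# Placeholder values, for illustration only
uri = datastore_uri("my-sub-id", "my-rg", "my-workspace", "workspaceblobstore", "folder/file.csv")
print(uri)
```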

You'll want to create data assets for frequently accessed data. Here's an easier way to access the CSV file in Pandas:

Important

In a notebook cell, execute this code to install the azureml-fsspec Python library in your Jupyter kernel:

Python

%pip install -U azureml-fsspec

Python

    import pandas as pd

    # get a handle of the data asset and print the URI
    data_asset = ml_client.data.get(name="credit-card", version=v1)
    print(f"Data asset URI: {data_asset.path}")

    # read into pandas - note that you will see 2 headers in your data frame - that is ok, for now
    df = pd.read_csv(data_asset.path)
    df.head()

Read Access data from Azure cloud storage during interactive development to learn more about data access in a notebook.

Create a new version of the data asset

You might have noticed that the data needs a little light cleaning, to make it fit to train a machine learning model. It has:

- two headers

- a client ID column; we wouldn't use this feature in Machine Learning

- spaces in the response variable name

Also, compared to the CSV format, the Parquet file format is a better way to store this data. Parquet offers compression, and it maintains schema. Therefore, to clean the data and store it in Parquet, use:

Python

    # read in data again, this time using the 2nd row as the header
    df = pd.read_csv(data_asset.path, header=1)

    # rename column
    df.rename(columns={"default payment next month": "default"}, inplace=True)

    # remove ID column
    df.drop("ID", axis=1, inplace=True)

    # write file to filesystem
    df.to_parquet("./data/cleaned-credit-card.parquet")
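To see what each cleaning step does without touching the real file, here is a sketch on a tiny in-memory sample. It uses only the standard library `csv` module instead of pandas, and the two data rows are made up. Only the `ID` and `default payment next month` column names come from the code above; `LIMIT_BAL` and the overall layout are assumptions about the file.

```python
import csv
import io

# Made-up sample that mimics the layout: an extra header row, then the real header
raw = io.StringIO(
    ",X1,Y\n"
    "ID,LIMIT_BAL,default payment next month\n"
    "1,20000,1\n"
    "2,120000,0\n"
)

rows = list(csv.reader(raw))
header, records = rows[1], rows[2:]  # skip the first header row (like header=1)

# Rename the response column so that it has no spaces
header = ["default" if name == "default payment next month" else name for name in header]

# Drop the ID column, which is not a useful feature
id_index = header.index("ID")
header = [name for i, name in enumerate(header) if i != id_index]
records = [[value for i, value in enumerate(row) if i != id_index] for row in records]

print(header)
print(records)
```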

This table shows the structure of the data in the original default_of_credit_card_clients.csv file downloaded in an earlier step. The uploaded data contains 23 explanatory variables and 1 response variable, as shown here:

| Column Name(s) | Variable Type | Description |
|---|---|---|
| X1 | Explanatory | Amount of the given credit (NT dollar): it includes both the individual consumer credit and their family (supplementary) credit. |
| X2 | Explanatory | Gender (1 = male; 2 = female). |
| X3 | Explanatory | Education (1 = graduate school; 2 = university; 3 = high school; 4 = others). |
| X4 | Explanatory | Marital status (1 = married; 2 = single; 3 = others). |
| X5 | Explanatory | Age (years). |
| X6-X11 | Explanatory | History of past payment. We tracked the past monthly payment records (from April to September 2005). -1 = pay duly; 1 = payment delay for one month; 2 = payment delay for two months; . . .; 8 = payment delay for eight months; 9 = payment delay for nine months and above. |
| X12-X17 | Explanatory | Amount of bill statement (NT dollar) from April to September 2005. |
| X18-X23 | Explanatory | Amount of previous payment (NT dollar) from April to September 2005. |
| Y | Response | Default payment (Yes = 1, No = 0) |
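As a quick check of the counts in this table, the column groups can be expanded programmatically. This is a small sketch; the names follow the X1 to X23 and Y labels used in the table, and the group labels are shorthand chosen here.

```python
# Column groups from the table: (first index, last index) of the X columns
groups = {
    "credit amount": (1, 1),
    "gender": (2, 2),
    "education": (3, 3),
    "marital status": (4, 4),
    "age": (5, 5),
    "payment history": (6, 11),
    "bill statement amounts": (12, 17),
    "previous payment amounts": (18, 23),
}

explanatory = [f"X{i}" for first, last in groups.values() for i in range(first, last + 1)]
response = ["Y"]

print(len(explanatory), len(response))  # -> 23 1
```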

Next, create a new version of the data asset (the data automatically uploads to cloud storage). For this version, we'll add a time value, so that each time this code is run, a different version number will be created.

Python

    from azure.ai.ml.entities import Data
    from azure.ai.ml.constants import AssetTypes
    import time

    # Next, create a new *version* of the data asset (the data is automatically uploaded to cloud storage):
    v2 = "cleaned" + time.strftime("%Y.%m.%d.%H%M%S", time.gmtime())
    my_path = "./data/cleaned-credit-card.parquet"

    # Define the data asset, and use tags to make it clear the asset can be used in training
    my_data = Data(
        name="credit-card",
        version=v2,
        description="Default of credit card clients data.",
        tags={"training_data": "true", "format": "parquet"},
        path=my_path,
        type=AssetTypes.URI_FILE,
    )

    ## create the data asset
    my_data = ml_client.data.create_or_update(my_data)

    print(f"Data asset created. Name: {my_data.name}, version: {my_data.version}")
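To make the version format concrete, the sketch below builds the same kind of string from a fixed timestamp instead of the current time, so the result is reproducible. The helper name `cleaned_version` is hypothetical.

```python
import time


def cleaned_version(seconds_since_epoch=None):
    """Return 'cleaned' plus a UTC timestamp, in the format used by the cell above."""
    moment = time.gmtime(seconds_since_epoch)  # None means "now"
    return "cleaned" + time.strftime("%Y.%m.%d.%H%M%S", moment)


print(cleaned_version(0))  # -> cleaned1970.01.01.000000
```

Because the timestamp has one-second resolution, two runs within the same second would produce the same version string.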

The cleaned Parquet file is the latest version of the data source. This code shows the CSV version result set first, then the Parquet version:

Python

    import pandas as pd

    # get a handle of the data asset and print the URI
    data_asset_v1 = ml_client.data.get(name="credit-card", version=v1)
    data_asset_v2 = ml_client.data.get(name="credit-card", version=v2)

    # print the v1 data
    print(f"V1 Data asset URI: {data_asset_v1.path}")
    v1df = pd.read_csv(data_asset_v1.path)
    print(v1df.head(5))

    # print the v2 data
    print(
        "_____________________________________________________________________________________________________________\n"
    )
    print(f"V2 Data asset URI: {data_asset_v2.path}")
    v2df = pd.read_parquet(data_asset_v2.path)
    print(v2df.head(5))

Clean up resources

If you plan to continue now to other tutorials, skip to Next steps.

Stop compute instance

If you're not going to use it now, stop the compute instance:

- In the studio, in the left navigation area, select Compute.

- In the top tabs, select Compute instances.

- Select the compute instance in the list.

- On the top toolbar, select Stop.

Delete all resources

Important

The resources that you created can be used as prerequisites to other Azure Machine Learning tutorials and how-to articles.

If you don't plan to use any of the resources that you created, delete them so you don't incur any charges:

- In the Azure portal, select Resource groups on the far left.

- From the list, select the resource group that you created.

- Select Delete resource group.

- Enter the resource group name. Then select Delete.

Next steps

Read Create data assets for more information about data assets.

Read Create datastores to learn more about datastores.

Continue with tutorials to learn how to develop a training script.

Model development on a cloud workstation
